James Serra's Blog. There are a tremendous number of cloud-based Microsoft products for building big data solutions. It's great that there are so many products to choose from, but it does lead to confusion about which products are best for particular use cases and how all the products fit together. My job as a Microsoft Cloud Solution Architect is to help companies learn about all the products and choose the best ones for building their solution. Based on a recent architecture design session with a customer, I wanted to list the products and use cases we discussed for their goal of building a big data solution in the cloud. I focus on compute and data storage products, not ingestion/ETL, real-time streaming, advanced analytics, or reporting. Also, only PaaS solutions are included, no IaaS.

Azure Data Lake Store (ADLS): This is a high-throughput distributed file system built for cloud-scale storage. It is capable of ingesting any data type, from videos and images to PDFs and CSVs. This is the landing zone for all data. It is HDFS compliant, meaning all products that work against HDFS will also work against ADLS. Think of ADLS as the place all other products will use as the source of their data. All data will be sent here, including on-prem data, cloud-based data, and data from IoT devices. This landing zone is typically called the Data Lake, and there are many great reasons for using a Data Lake (see Data lake details, Why use a data lake, and Big data architectures and the data lake).

Azure HDInsight (HDI): Under the covers, HDInsight is simply Hortonworks HDP 2.x.
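Because ADLS is HDFS compliant, Hadoop-based products such as those in HDInsight address it with a URI rather than a local path. Here's a small sketch that builds landing-zone URIs in the `adl://<account>.azuredatalakestore.net/` form used by Hadoop's ADLS connector. The `raw/<source>/<yyyy>/<mm>/<dd>` folder layout, the source labels, and the account name in the example are hypothetical conventions for illustration, not something ADLS requires.

```python
from datetime import date

# Endpoint suffix for an ADLS (Gen1) account, addressed via the adl:// scheme.
ADLS_HOST_SUFFIX = "azuredatalakestore.net"

# Hypothetical labels for the three kinds of sources mentioned above.
SOURCES = {"onprem", "cloud", "iot"}


def landing_zone_uri(account: str, source: str, day: date, filename: str) -> str:
    """Build an adl:// URI for a file landing in the data lake.

    The raw/<source>/<yyyy>/<mm>/<dd>/ layout is an illustrative convention only.
    """
    if source not in SOURCES:
        raise ValueError(f"unknown source: {source!r}")
    path = f"raw/{source}/{day:%Y/%m/%d}/{filename}"
    return f"adl://{account}.{ADLS_HOST_SUFFIX}/{path}"


if __name__ == "__main__":
    print(landing_zone_uri("contosolake", "iot", date(2017, 9, 14), "telemetry.csv"))
```

Partitioning by source and date is one common way to keep a single landing zone navigable as every product reads from it; any consistent scheme would work.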

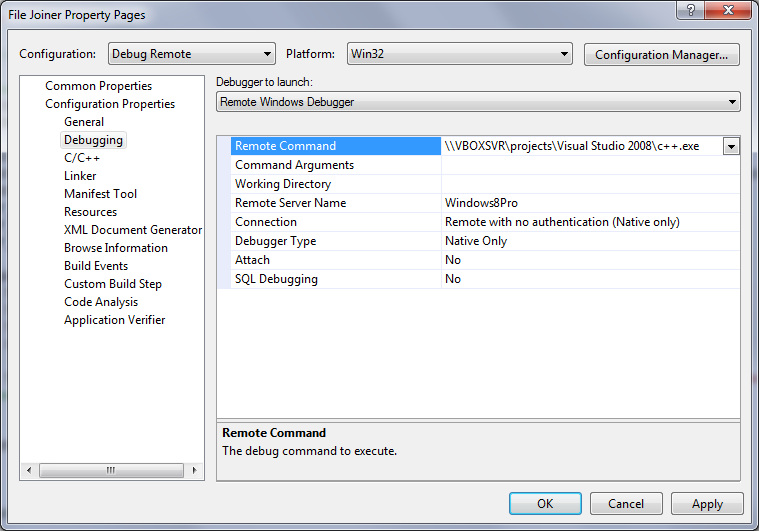

HDInsight includes open source products such as Hadoop Common, YARN, MapReduce, Spark, HBase, Storm, and Kafka. You can use any of those, or install other open source products, and they can all use the data in ADLS: HDInsight just connects to ADLS and uses it as its storage source.

Azure Data Lake Analytics (ADLA): This is a distributed analytics service built on Apache YARN. You submit a job to the service, and the service automatically runs it in parallel in the cloud and scales to process data of any size. Included with ADLA is U-SQL, which has a scalable distributed query capability that lets you efficiently analyze data, whether structured (CSV) or not (images), in Azure Data Lake Store and across Azure Blob Storage, SQL Server in Azure, Azure SQL Database, and Azure SQL Data Warehouse. Note that U-SQL supports batch queries only; it does not support interactive queries, and it does not handle persistence or indexing.

Azure Analysis Services (AAS): This is a PaaS offering of SQL Server Analysis Services (SSAS). It allows you to create an Azure Analysis Services Tabular Model (a cube). A key AAS feature is scale-out for high availability and high concurrency. It also creates a semantic model over the raw data, making it much easier for business users to explore the data. It pulls data from ADLS, aggregates it, and stores it in AAS. Adding a cube to your solution requires extra work, namely the time to process the cube, and ad hoc queries that were not predetermined will perform more slowly, but a cube has additional benefits (see Why use a SSAS cube).

Azure SQL Data Warehouse (SQL DW): This is a SQL-based, fully managed, petabyte-scale cloud data warehouse. It's highly elastic: you can set it up in minutes and scale capacity in seconds.
You can scale compute and storage independently, which allows you to burst compute for complex analytical workloads. It is an MPP technology that shines when used for ad hoc queries in relational format. It requires data to be copied from ADLS into SQL DW, but this can be done quickly using PolyBase. Because compute and storage are separated, you can pause SQL DW to save costs (see SQL Data Warehouse reference architectures).

Azure Cosmos DB: This is a globally distributed, multi-model (key-value, graph, and document) database service. It fits into the NoSQL camp, with a non-relational model that supports schema-on-read and JSON documents, and it works really well for large-scale OLTP solutions. It can also be used for a data warehouse when combined with Apache Spark (the subject of a later blog). See Distributed Writes and the presentation Relational databases vs Non-relational databases. It requires data to be imported into it from ADLS using Azure Data Factory.

Azure Search: This is a search-as-a-service cloud solution that gives developers APIs and tools for adding a rich full-text search experience over your data. You can store indexes in Azure Search with pointers to objects sitting in ADLS. Azure Search is rarely used in data warehouse solutions, but if you need queries such as getting the number of records that contain "win", it may be appropriate. Azure Search supports a pull model that crawls a supported data source, such as Azure Blob Storage or Cosmos DB, and automatically uploads the data into your index. It also supports a push model for other data sources, such as ADLS, where you programmatically send the data to Azure Search to make it available for searching. Note that Azure Search is built on top of Elasticsearch and uses the Lucene query syntax.

Azure Data Catalog: This is an enterprise-wide metadata catalog that makes data asset discovery straightforward.
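To illustrate the catalog idea, here's a toy, in-memory catalog. It is not the Azure Data Catalog API; `DataAsset` and `ToyCatalog` are hypothetical names. It shows two core operations, registering an asset keyed by its location (such as an ADLS path) and discovering assets by tag, and when the same location is registered twice it enriches the existing entry instead of creating a duplicate.

```python
from dataclasses import dataclass, field


@dataclass
class DataAsset:
    name: str
    location: str                      # e.g. an ADLS path
    tags: set[str] = field(default_factory=set)
    description: str = ""


class ToyCatalog:
    """Hypothetical in-memory catalog keyed by asset location."""

    def __init__(self) -> None:
        self._by_location: dict[str, DataAsset] = {}

    def register(self, asset: DataAsset) -> DataAsset:
        existing = self._by_location.get(asset.location)
        if existing is not None:
            # Same data already registered: enrich the entry with the new tags
            # rather than adding a second copy.
            existing.tags |= asset.tags
            return existing
        self._by_location[asset.location] = asset
        return asset

    def discover(self, tag: str) -> list[DataAsset]:
        """Return all assets carrying `tag`, sorted by name."""
        matches = (a for a in self._by_location.values() if tag in a.tags)
        return sorted(matches, key=lambda a: a.name)

    def __len__(self) -> int:
        return len(self._by_location)
```

Keying on location is what lets a second team find the existing "Sales 2017" asset instead of loading another copy of the same files.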
Azure Data Catalog is a fully managed service that lets you register, enrich, discover, understand, and consume data sources such as ADLS. It is a single, central place for all of an organization's users to contribute their knowledge and build a community and culture of data. Without this product, you risk a lot of data duplication and wasted effort.

In addition to ADLS, Azure Blob storage can be used instead of ADLS or in combination with it. When comparing ADLS with Blob storage, Blob storage has the advantage of lower cost, since there are now three Azure Blob storage tiers (Hot, Cool, and Archive) that are all less expensive than ADLS. The advantage of ADLS is that there are no limits on account size or file size (Blob storage has a 5 PB account limit and a file size limit of just over 4 TB). ADLS is also faster, because files are auto-sharded (chunked), whereas in Blob storage they remain intact. ADLS supports Azure Active Directory, while Blob storage supports SAS keys. ADLS also supports WebHDFS, while Blob storage does not; instead it supports WASB, a thin layer over Blob storage that exposes it as an HDFS file system. Finally, while Blob storage is available in all Azure regions, ADLS is only in two US regions (East and Central) and North Europe, with other regions coming soon. See Comparing Azure Data Lake Store and Azure Blob Storage.

Now that you have a high-level understanding of all the products, the next step is to determine the best combination to build a solution. If you want to use Hadoop and don't need a relational data warehouse, the product choices may look like this. Most companies will use a combination of HDI and ADLA. The main advantage of ADLA over HDI is that there is nothing you have to manage. HDI clusters are always running and incurring costs whether or not you are processing data, whereas with ADLA you can scale individual queries independently of each other instead of having queries fight for resources in the same HDInsight cluster (predictable vs. unpredictable performance).
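To make the cost difference concrete, here's a rough sketch comparing a cluster left running all month with per-job billing. It assumes a flat hourly rate for the HDI cluster and, for ADLA, per-job charges proportional to AU-hours (AUs used times hours run). The rates are parameters with made-up values, not real Azure prices, and `HOURS_PER_MONTH` is just a common approximation.

```python
HOURS_PER_MONTH = 730  # common approximation of the hours in a month


def always_on_cluster_cost(hourly_rate: float, hours: float = HOURS_PER_MONTH) -> float:
    """Cost of a cluster that runs for `hours`, whether busy or idle."""
    return hourly_rate * hours


def per_job_cost(jobs: list[tuple[int, float]], rate_per_au_hour: float) -> float:
    """Cost when only jobs are billed.

    Each job is (analytics_units, hours_run); idle time costs nothing.
    """
    au_hours = sum(aus * hours for aus, hours in jobs)
    return rate_per_au_hour * au_hours


if __name__ == "__main__":
    # Illustrative numbers only.
    print(always_on_cluster_cost(2.0))                   # cluster left on all month
    print(per_job_cost([(10, 0.5), (20, 1.0)], 2.0))     # two batch jobs
```

With these made-up numbers the always-on cluster costs 1460.0 for the month, while two jobs totaling 25 AU-hours cost 50.0. The gap narrows as the cluster's utilization rises, so the comparison depends on your workload.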
In addition, ADLA is always available, so there is no startup time to create a cluster as there is with HDI. HDI has an advantage in that it has more products available with it (e.g., Kafka), and you can customize it (e.g., by installing other open source products), which you cannot do with ADLA. When submitting a U-SQL job under ADLA, you specify the resources to use via Analytics Units (AUs). Currently, an AU is the equivalent of 2 CPU cores and 6 GB of RAM, and you can go as high as the maximum AU limit set for your account. For HDI, you can give more resources to your query by increasing the number of worker nodes in a cluster. This is limited by the region's maximum core count per subscription, but you can contact billing support to increase your limit.
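The resource arithmetic above is simple enough to sketch. `au_resources` converts a number of AUs into cores and RAM using the 2-core/6 GB figures quoted above (which may change over time), and `max_worker_nodes` estimates how many HDI worker nodes fit under a regional core quota. Both function names are hypothetical, and the second assumes that head nodes (and any other non-worker nodes) draw cores from the same quota.

```python
CORES_PER_AU = 2    # per the AU definition quoted above
RAM_GB_PER_AU = 6


def au_resources(aus: int) -> tuple[int, int]:
    """Return (CPU cores, GB of RAM) for a U-SQL job using `aus` Analytics Units."""
    if aus < 1:
        raise ValueError("a job needs at least one AU")
    return aus * CORES_PER_AU, aus * RAM_GB_PER_AU


def max_worker_nodes(regional_core_quota: int, cores_per_worker: int,
                     other_node_cores: int) -> int:
    """Estimate how many worker nodes fit under a regional core quota.

    Assumes non-worker nodes (e.g. head nodes) consume `other_node_cores`
    from the same quota.
    """
    available = regional_core_quota - other_node_cores
    if available < cores_per_worker:
        return 0
    return available // cores_per_worker
```

For example, 10 AUs correspond to 20 cores and 60 GB of RAM, and a 100-core quota with 16 cores used by non-worker nodes leaves room for ten 8-core workers. Raising the quota through billing support raises only the second number; the AU figures are fixed by the service.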
